A common question is: how accurate is GPTZero? Can it really distinguish between text produced by AI and text written by humans? After digging deeper into its performance, I can say GPTZero performs quite well: it is about 80% accurate, but it still misses a fair amount of AI-generated text.

It is fairly adept at identifying human writing and very seldom labels a person’s work as “AI.” It is less adept at recognizing machine-generated writing. In short, it is helpful, but its results should be viewed critically.

Where GPTZero stands out is its low false positive rate. It very rarely mistakes human writing for AI, which is reassuring. On the other hand, it does let some AI-generated text pass as “human.” That is exactly what makes GPTZero useful but not fully trustworthy.

GPTZero Review: Is it Any Good?

How GPTZero Detects AI-Generated Text

Here is how GPTZero actually works. It examines text patterns and uses machine learning to tell human writing apart from AI output.

The system weighs a range of factors across different kinds of writing, such as student essays, to reach its decision. It isn’t magic, but it is pretty clever all the same.

Core Detection Metrics: Perplexity and Burstiness

Perplexity is one of GPTZero’s main tricks. It measures how predictable the words are from the perspective of a language model. Lower perplexity indicates a more formulaic writing style, often a sign that the text was generated by an algorithm.
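To make the idea concrete, here is a minimal sketch of how perplexity is commonly computed: the exponential of the average negative log-probability a language model assigns to each token. GPTZero does not publish its exact model, so the token probabilities below are made-up numbers standing in for what a model might assign; the point is only that text whose tokens are all highly probable gets a low perplexity.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp(average negative log-probability per token).

    token_probs: probabilities a language model assigned to each actual
    token in the text (hypothetical values in this example).
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# Hypothetical probabilities: a "predictable" passage vs. a "surprising" one.
predictable = [0.9, 0.8, 0.85, 0.9, 0.7]
surprising = [0.2, 0.05, 0.3, 0.1, 0.4]

print(f"predictable: {perplexity(predictable):.2f}")
print(f"surprising:  {perplexity(surprising):.2f}")
```

The predictable passage scores close to 1, while the surprising one scores several times higher; a real detector would get these probabilities from a language model rather than a hand-written list.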

Then there is burstiness, which measures how much sentence structure and vocabulary vary across a text. Human writing is more variable; AI writing is smoother and more consistent. GPTZero treats a lack of variation as a signal of AI.
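Burstiness can be approximated in many ways. The sketch below uses one simple stand-in, which is an assumption rather than GPTZero’s actual formula: the coefficient of variation (standard deviation divided by mean) of sentence lengths in words. Uniform sentences score near zero; a mix of very short and very long sentences scores higher.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and count the words in each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (a simple proxy)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The storm rolled in over the hills before anyone had time to react. Why?"

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

Sentence length is only one dimension of variation; the same coefficient could be computed over per-sentence perplexity or vocabulary measures instead.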

Combining perplexity and burstiness is where it starts to make sense. Perplexity alone only tells you how predictable the words are, and burstiness alone only tells you whether the sentences vary the way natural writing does. Since neither signal is enough by itself, GPTZero’s two-pronged approach identifies AI text better than either measure could alone.
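A toy illustration of why two signals beat one: the function below only calls a text “likely AI” when both perplexity and burstiness are low, and reports “mixed” when they disagree. The cutoff values are hypothetical placeholders, not GPTZero’s thresholds, and a real detector would weigh the signals rather than simply counting them.

```python
def combined_verdict(perplexity, burstiness, ppl_cutoff=20.0, burst_cutoff=0.3):
    """Hypothetical rule: count how many of the two signals point to AI."""
    low_perplexity = perplexity < ppl_cutoff    # words are predictable
    low_burstiness = burstiness < burst_cutoff  # sentences are uniform
    votes = int(low_perplexity) + int(low_burstiness)
    return {2: "likely AI", 1: "mixed", 0: "likely human"}[votes]

print(combined_verdict(12.0, 0.1))  # both signals low
print(combined_verdict(12.0, 0.9))  # predictable words, varied sentences
print(combined_verdict(45.0, 0.9))  # neither signal low
```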

Machine Learning Classifier Approach

GPTZero trains machine learning classifiers on large corpora of both human-written and AI-generated text. It then compares new text against the patterns it has learned and estimates whether that text is AI-generated.

The method keeps improving as it learns from more examples. For each text, it outputs a confidence score indicating how sure it is about where the text came from.
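GPTZero’s actual classifier and training data are not public. As a minimal sketch of the general technique, the code below trains a two-feature logistic regression with plain batch gradient descent on a handful of hypothetical, pre-scaled (perplexity, burstiness) pairs, then returns a probability that plays the role of the confidence score.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def train_logistic(samples, labels, lr=0.5, epochs=2000):
    """Fit P(AI) = sigmoid(w0*x0 + w1*x1 + b) by batch gradient descent.

    samples: (scaled perplexity, burstiness) pairs; labels: 1 = AI, 0 = human.
    """
    w = [0.0, 0.0]
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x0, x1), y in zip(samples, labels):
            # Gradient of the log-loss with respect to the logit.
            err = sigmoid(w[0] * x0 + w[1] * x1 + b) - y
            grad_w[0] += err * x0
            grad_w[1] += err * x1
            grad_b += err
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b

def ai_confidence(model, features):
    """Return the model's probability that the text is AI-generated."""
    w, b = model
    return sigmoid(w[0] * features[0] + w[1] * features[1] + b)

# Hypothetical training data, features already scaled to roughly 0-1.
ai_texts = [(0.20, 0.10), (0.25, 0.15), (0.15, 0.20), (0.30, 0.10)]
human_texts = [(0.70, 0.60), (0.80, 0.50), (0.65, 0.70), (0.90, 0.80)]
samples = ai_texts + human_texts
labels = [1] * len(ai_texts) + [0] * len(human_texts)

model = train_logistic(samples, labels)
print(f"{ai_confidence(model, (0.22, 0.12)):.2f}")  # resembles the AI examples
print(f"{ai_confidence(model, (0.75, 0.65)):.2f}")  # resembles the human examples
```

Logistic regression is chosen here only because it is the simplest classifier that naturally outputs a probability; a production detector would use far more features and data.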

This ongoing “learning” is what lets GPTZero keep pace with newer AI models whose output increasingly resembles human writing. I suspect that’s why it’s convenient for teachers and editors who regularly need to review essays and reports.

Content Types Analyzed by GPTZero

GPTZero works on all types of writing, but it is particularly optimized for academic and professional writing. It also handles student essays, since these are the most obvious target for AI assistance.

It can handle both short paragraphs of around 40-100 words and longer essays of up to 800 words. Frankly, it does better on longer pieces simply because it has more information to work from.

GPTZero can also analyze emails, reports, online posts, and more, though accuracy will vary with the length and complexity of the text. It may get tripped up by short, simple samples, so I always treat those results with caution.

Measuring GPTZero’s Accuracy

I took a close look at how well GPTZero tells AI-generated text from human writing. Its performance swings a bit, depending on the context, the AI model, and the length of what you feed it.

Reported Accuracy Rates and Benchmarks

Reported accuracy for GPTZero ranges from about 80% to 98%. Under controlled conditions with clearly AI-generated content, it can reach 98%. It seems most successful with academic papers and formal essays, likely because of the formulaic nature of such writing.

In the real world, accuracy drops to about 85-90%, because writing styles are all over the map. GPTZero keeps its false positive rate low, at around 1-2%, meaning it very rarely labels a human’s writing as “AI.” False negatives are higher, perhaps as high as 17%, so some AI text slips through.

The trade-off: it’s fairly good at catching obvious AI, but it isn’t perfect.

Accuracy With Different AI Models

Its detection rates will vary depending on the AI model. GPTZero performs best with GPT-3, GPT-3.5, and GPT-4, with detection rates over 90%.

With newer or less common text generators, such as Claude or Bard, accuracy takes a slight hit. Their output sounds more natural, so GPTZero has a harder time recognizing it as machine-written.

The developers continue to update GPTZero to catch new AI patterns, but heavily edited AI text, or simply well-written AI text, will still get through.

Impact of Text Length and Complexity

Text length matters a lot. Longer essays give GPTZero more cues to work with, so accuracy climbs to 95-98%. Really short text, like tweets or quick messages, is much harder to classify.

Complexity makes a difference too. GPTZero’s metrics work better on formal writing with varied sentence lengths. Creative or idiomatic writing, or paraphrased text, can throw it off.

To illustrate: if you edit AI-generated text to make it sound more “human,” GPTZero’s accuracy drops even further.

False Positives and False Negatives in GPTZero

Sometimes GPTZero gets it wrong, either by calling a human’s work “AI” or missing AI-written content. These mistakes usually come down to the patterns, length, and style of the text.

What Causes False Positives

A false positive is when GPTZero flags human writing as AI. This typically happens with simple, well-structured writing that resembles something a machine might produce. Student papers that use simple or “standard” language are particularly at risk.

The more repetitive the text or the simpler its syntax, the more likely it is to be flagged. That is a real headache for teachers who rely on GPTZero alone.

Short or very simple messages also make it hard for GPTZero to tell what’s what, so be wary of results on them.

Understanding False Negatives

A false negative is when GPTZero misses AI-generated text and judges it to be human-written. This happens more often with AI writing that closely imitates natural, conversational styles.

If ChatGPT writes something casual or chatty, GPTZero has a harder time catching it. It is better at catching AI in technical or academic writing, where machine-like patterns are more pronounced.

This is a real concern in education, for example, where students may use AI to complete assignments and submit them for grading without being caught.

False Positive and Negative Rates

From what I have observed across various tests, GPTZero’s false positive rate seems to be around 10%. In other words, about one in ten human-written texts is mistakenly identified as AI-generated. The rate of missed AI texts is a bit lower, with a false negative rate of roughly 5%.

| Error Type | Approximate Rate |
|---|---|
| False Positives | 10% |
| False Negatives | 5% |
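Both rates in the table come from a simple confusion matrix. The sketch below computes them from raw counts; the counts are hypothetical, chosen so that 100 human texts and 100 AI texts reproduce the 10% and 5% rates above. It also shows that sensitivity (the share of AI text correctly flagged) is just one minus the false negative rate.

```python
def error_rates(tp, fn, tn, fp):
    """Detector error rates from a confusion matrix.

    tp: AI texts correctly flagged     fn: AI texts missed
    tn: human texts correctly passed   fp: human texts wrongly flagged as AI
    """
    return {
        "false_positive_rate": fp / (fp + tn),  # share of human texts flagged
        "false_negative_rate": fn / (fn + tp),  # share of AI texts missed
        "sensitivity": tp / (tp + fn),          # share of AI texts caught = 1 - FNR
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }

# Hypothetical counts: 100 human texts and 100 AI texts.
rates = error_rates(tp=95, fn=5, tn=90, fp=10)
for name, value in rates.items():
    print(f"{name}: {value:.1%}")
```

With these counts overall accuracy works out to 92.5%, which shows how a single accuracy figure can hide two quite different error rates.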

These numbers show GPTZero does a decent job, but you shouldn’t use it as your only tool. The risk of false positives is real, so always double-check.

Comparing GPTZero With Other AI Detection Tools

I’ve spent time comparing GPTZero’s accuracy to other big-name AI detectors. Each tool has its own quirks, features, and pricing, and some are better suited to certain jobs than others.

GPTZero vs Originality.ai

Overall, Originality.ai performs slightly better than GPTZero at detecting paraphrased or heavily edited AI content. It advertises up to 99% accuracy and less than 1% false positives with its ‘Lite’ model. GPTZero is not far behind, catching around 98% of unedited AI text, though that drops to 85-95% on paraphrased content.

GPTZero seems to focus mainly on English and the largest AI models, whereas Originality.ai supports 15 languages. Originality.ai also adds plagiarism checks, fact-checking, and readability scores.

GPTZero is cheaper and has much more flexible pricing. It is particularly strong in academic settings, where it can reach up to 96% accuracy on essays. That makes it a good choice for educators and students on a budget.

| Feature | Originality.ai | GPTZero |
|---|---|---|
| Accuracy | Up to 99% | Around 98% |
| Paraphrase Detection | Strong | Moderate |
| Language Support | 15 languages | Limited |
| Additional Tools | Plagiarism, fact-checking | Academic-focused tools |
| Pricing | No free tier, requires card | Tiered plans, affordable |

GPTZero vs Copyleaks

Copyleaks is known for dovetailing AI detection with plagiarism checks, and is widely used by teachers and publishers. It relies on the use of AI models to detect machine generated writing, as well as to find plagiarism from online sources.

Copyleaks integrates with learning management systems and supports more content types than GPTZero. However, it’s more expensive and doesn’t expose metrics such as perplexity and burstiness, which are GPTZero’s main selling points.

For academic checks, I prefer GPTZero, which I find more direct and faster. Copyleaks is the better choice if you want an all-in-one tool for plagiarism and AI detection.

GPTZero vs Winston AI

Winston AI is a newer entrant that uses advanced algorithms to detect AI content. Its accuracy is comparable to GPTZero’s, particularly on straightforward AI text. Winston also goes to great lengths to keep false positives low, which matters when dealing with sensitive cases.

The bigger differences are in user experience and cost. Winston AI is simple and fast, but its free tier is restricted. GPTZero has more flexible pricing and offers batch processing for researchers.

Both support the major models like GPT-3 and GPT-4, but GPTZero’s focus on academic use gives schools and universities good reason to consider it.

GPTZero vs Turnitin

Turnitin is the go-to platform for plagiarism checks in education, and it has now added AI detection. It combines text matching with analysis of AI writing patterns to detect machine-written work.

Turnitin’s strength is that its AI detection complements its established plagiarism technology. However, its AI detection can be somewhat opaque, and it generally requires an institutional subscription.

GPTZero is more focused on AI detection and provides clear writing-style metrics. It is also cheaper for individuals, since Turnitin typically comes as part of larger institutional contracts.

If you need both plagiarism and AI checks in a large academic environment, Turnitin is probably the best answer. GPTZero is likely a better fit for smaller users or those who only need AI detection.

Strengths and Limitations of GPTZero

GPTZero does a solid job in some areas but definitely has its weak spots. It’s great at catching straightforward AI-generated text, but struggles with more complex or heavily edited writing.

Advantages in Academic and Professional Settings

In my opinion, it works best for academic papers and other formal professional writing. Using perplexity and burstiness, it picks out the predictable, ‘normal’ patterns more typical of AI, as opposed to the ‘weird’ and diverse forms humans use.

In formal tests under these conditions, accuracy can exceed 90% with almost no false positives. For teachers and employers, that means a lower likelihood of false accusations and a realistic chance of identifying blatant AI use.

Challenges With Paraphrased and Hybrid Content

If AI-generated text is heavily edited or combined with human-written text, the tool becomes less accurate. On paraphrased and rewritten content, GPTZero’s detection rate usually falls to somewhere between 60% and 70%.

This is because the tool relies on identifying patterns of consistent AI behavior. Once text is changed, these patterns are lost and difficult to discern.

Hybrid documents, written jointly by humans and AI, cause the most confusion. Their mixed signals can lead to detection errors in both directions: false positives and false negatives.
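A small sketch of why hybrid documents are hard to score as a whole: if a detector simply averaged per-sentence scores, two strongly AI-like sentences and two strongly human-like ones would average out to an ambiguous 0.5, while the sentence-level scores still show where the AI text is. The per-sentence scores and the 0.5 threshold are hypothetical, and this is not a description of how GPTZero aggregates its scores internally.

```python
from statistics import mean

def summarize_scores(sentence_scores, threshold=0.5):
    """Return the document-level average and the indices of flagged sentences."""
    doc_score = mean(sentence_scores)
    flagged = [i for i, s in enumerate(sentence_scores) if s >= threshold]
    return doc_score, flagged

# Hypothetical per-sentence AI probabilities for a hybrid document:
# two AI-written sentences followed by two human-written ones.
scores = [0.95, 0.90, 0.10, 0.05]
doc_score, flagged = summarize_scores(scores)
print(f"document score: {doc_score:.2f}")  # ambiguous on its own
print(f"flagged sentences: {flagged}")
```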

As people get more creative at disguising AI-generated material, GPTZero’s limited grasp of context becomes increasingly apparent.

Handling Short Texts and Non-Native Writing

What about short texts? Those are a headache for GPTZero. With less data to work with, perplexity and burstiness become much less meaningful, and predictions become unstable.
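One way to see why short texts are unstable: perplexity is the exponential of an average per-token loss (negative log-probability), and the uncertainty in an average shrinks roughly with the square root of the number of tokens. The sketch below assumes a hypothetical per-token loss standard deviation of 2.0 nats and treats tokens as independent, which real text is not, so read it as rough intuition rather than a model of GPTZero.

```python
import math

def perplexity_interval(mean_nll, per_token_sd, n_tokens, z=2.0):
    """Rough range for perplexity given the average per-token loss (in nats).

    Assumes independent tokens, so the standard error of the mean loss is
    per_token_sd / sqrt(n_tokens); perplexity is exp(mean loss).
    """
    se = per_token_sd / math.sqrt(n_tokens)
    return math.exp(mean_nll - z * se), math.exp(mean_nll + z * se)

# Hypothetical values: mean loss 3.0 nats (perplexity of about 20), per-token sd 2.0.
for n in (25, 100, 400):
    low, high = perplexity_interval(3.0, 2.0, n)
    print(f"{n:>4} tokens: perplexity between {low:.1f} and {high:.1f}")
```

At 25 tokens the plausible range spans roughly a factor of five, which is why a tweet-length sample gives the detector so little to go on.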

It simply does not work as well on shorter samples, where writing patterns are harder to detect.

Non-native English writers can also be mistakenly flagged, because the tool can misread their idiosyncratic phrasing and unusual word combinations.

For the same reason, false positives increase with formulaic or highly structured writing. That is why it’s important to consider context, so we don’t misjudge what different writers produce.

Best Practices for Using GPTZero Effectively

Getting the most out of GPTZero means understanding its results and knowing how to use its feedback. I pay attention to how it explains its findings, and I adjust my approach to checking texts based on what it shows me.

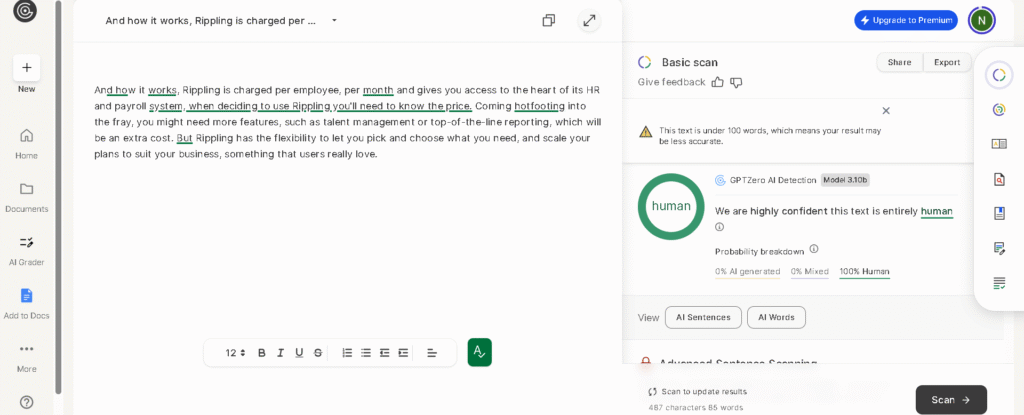

Interpreting GPTZero’s Results

GPTZero gives you a percentage indicating how likely it thinks the content is to be AI-generated, but that is never a guarantee. I treat it as a likelihood, not a verdict.

It can mistakenly flag human writing as AI (false positives) or fail to detect edited AI text (false negatives). I like the explanation feature, which shows you which parts of your text raised suspicion.

Those highlights let me judge whether the prediction makes sense in context. I never base a decision on the score alone; I combine the GPTZero score with my own judgment and other checks before deciding.
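Treating the score as a likelihood rather than a verdict can be made explicit with a three-way triage. The cutoffs below (0.9 and 0.2) are hypothetical choices for illustration, not values GPTZero recommends; the wide middle band is deliberately routed to human review.

```python
def triage(ai_probability, flag_at=0.9, clear_below=0.2):
    """Map a detector probability to an action; the cutoffs are hypothetical."""
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if ai_probability >= flag_at:
        return "strong AI signal: review closely"
    if ai_probability < clear_below:
        return "likely human"
    return "inconclusive: needs human judgment"

for p in (0.95, 0.55, 0.10):
    print(p, "->", triage(p))
```

Even the top band only triggers a closer review, not an accusation, which matches the idea of combining the score with other evidence.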

Enhancing Detection Reliability

The longer and more complete the text, the more reliable the detection. GPTZero struggles more with short snippets than with longer passages, since it has less to work with.

I prefer complete samples to partial ones. Using the latest version of GPTZero also matters, since the tool’s capabilities grow as AI writing evolves.

I generally supplement GPTZero with a manual review to catch errors. Every tool has limits, and it’s good to remember that.

Offering Writing Feedback

GPTZero is useful for more than detection: I also use it for writing feedback. When I scan a piece, it highlights AI-sounding phrasing and clunky patterns.

This also allows me to make recommendations on how authors can sound more authentic and natural. This is useful feedback that can help tighten the style and credibility of one’s work.

Ultimately, GPTZero encourages honest writing. It isn’t just about catching AI use; it’s also about making the writing better.

Frequently Asked Questions

I’ve dug into how well GPTZero spots AI-generated text and found details on its accuracy, how it compares to other tools, new features, privacy, and its limitations. Here’s what stands out and where you might want to watch your step.

What is the accuracy rate of GPTZero when detecting AI-generated text?

Its “accuracy” really depends on the situation in which GPTZero is used. It correctly identifies AI-generated text with 80% to 98% accuracy.

Its performance is better when dealing with more formal or academic types of writing. When that AI content is highly edited or paraphrased, the number can dip to 60-70%.

Can GPTZero reliably distinguish between human and AI-authored content?

GPTZero uses metrics like perplexity and burstiness to distinguish AI from human writing. It is solid when faced with clear AI output, but at times it can’t tell technical or formulaic human writing apart from AI.

It also fails to pick up on some AI texts which have been heavily edited by humans.

Are there any documented comparisons of GPTZero’s accuracy to other plagiarism detection tools?

Yes. GPTZero sits somewhere in the middle of the pack. Tools such as Originality.ai claim up to 99% accuracy, especially on more advanced AI-generated content.

Other options may target large organizations or offer specialized features, but GPTZero stands out for its detection quality and price point.

What are the latest improvements in GPTZero’s detection algorithms as of 2025?

GPTZero retrains its machine learning models every month. These updates help it keep up with new AI writing techniques and refine how it recognizes difficult patterns.

The tool also adapts based on how users interact with it and the feedback they provide. Still, not every detection problem is solved.

How does GPTZero ensure the privacy and safety of the documents it analyzes?

According to GPTZero, it does not store texts after detection and keeps user data safe. It uses standard encryption in transit and follows best practices for protecting content.

Even so, I would be cautious about uploading particularly sensitive or confidential documents.

What are the limitations of using GPTZero for AI content detection?

GPTZero’s false negative rate is not trivial; by some estimates it is as high as 17%. At times it will also identify human writing as AI, particularly when the text is technical or somewhat formulaic.

Some tests put its sensitivity, the share of AI-generated text it correctly flags, as low as about 65%. It catches most AI content, but not all.

To be honest, I wouldn’t trust it entirely; it works best paired with human review.