AI detection technology keeps improving, to the dismay of writers and content creators who use AI-generated text. This is where AI humanizer tools come in: they rewrite text so it sounds more natural, as if a real person wrote it, helping you avoid irritating detection flags while preserving your ideas.

If you bring original ideas of your own and draw on real content and context, rather than trying to pull the wool over people’s eyes or to spite the institution that assigned the work, then let’s face it: these tools work well.

Calling these tools a backdoor is misleading: there is still enough “you” in the process to do the work, a bit of human ingenuity coupled with AI speed. Some skilled users will treat them as a backdoor just to avoid punishment, but that is not really the point; the point is protecting your integrity.

Best AI Humanizer Tools: My Ultimate List

Understanding AI Detection Systems

AI detection is the process of scanning a piece of writing to determine whether a human or a machine produced it. Detectors scrutinize sentence construction, diction, and rhythm. When they flag text incorrectly, it becomes a headache for those of us trying to keep our writing authentic and clear without triggering false positives.

How AI Detectors Identify AI-Generated Text

AI detectors look for characteristics such as burstiness and perplexity to identify AI-generated content. Burstiness is simply the variation in sentence length and tone.

Humans tend to do this naturally: we mix short and long sentences, while AI often produces a string of relatively uniform, boring ones. Perplexity indicates how predictable your word choices are over the course of the text; the more predictable the wording, the lower the perplexity.
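
To make burstiness a little more concrete, here is a minimal Python sketch that scores a text by how much its sentence lengths vary. It is an illustration under my own assumptions, not how any particular detector works: the function names `sentence_lengths` and `burstiness` are made up, the score is the coefficient of variation of sentence length, and it ignores tone entirely.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! and ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    This is an assumed proxy for burstiness. It returns 0.0 when the
    text has fewer than two sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The dog ran off across the wet field before anyone could call it back. Why?"

print(burstiness(uniform))  # every sentence has four words, so the score is 0.0
print(burstiness(varied))   # one long sentence between two short ones scores higher
```

A higher score only means the sentence lengths are more mixed. It says nothing about whether the text reads well, so treat it as a rough self-editing aid.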

AI writing has a slightly formulaic quality because it leans heavily on commonly paired words. Models such as GPT or Gemini, which these detectors target, do not “understand” what you are saying in any meaningful sense.

| Signal | What it measures | Example that can raise flags |
|---|---|---|
| Burstiness | Variation in sentence length | Sentences are too uniform and slick |
| Perplexity | Predictability of word choices | Repetitive or formal tone |
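
Perplexity has a precise definition: the exponential of the average negative log-probability that a language model assigns to each word. Real detectors presumably use large neural models for this. The Python sketch below swaps in a toy unigram model with add-one smoothing, purely to show the arithmetic. The function names and the sample texts are my own assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and extract runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(sample: str, reference: str) -> float:
    """Perplexity of `sample` under an add-one-smoothed unigram model of `reference`.

    Toy stand-in for a real language model: each word's probability
    depends only on how often it appears in the reference text.
    """
    ref_tokens = tokenize(reference)
    counts = Counter(ref_tokens)
    vocab_size = len(counts) + 1  # +1 reserves one slot for all unseen words
    total = len(ref_tokens)

    tokens = tokenize(sample)
    if not tokens:
        raise ValueError("sample contains no words")

    log_prob = 0.0
    for tok in tokens:
        p = (counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat and the dog sat on the rug"
predictable = "the cat sat on the mat"
surprising = "quantum marmalade negotiates purple treaties"

print(unigram_perplexity(predictable, reference))  # lower: every word was seen before
print(unigram_perplexity(surprising, reference))   # higher: every word is unseen
```

The predictable sample scores lower because the model has seen all of its words. That is the pattern detectors are said to associate with AI text.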

Common Challenges with AI-Generated Content

AI-generated writing is often too frictionless, with no hiccups or pauses to make it feel alive. Because detectors key on that smoothness, they also produce many false positives: legitimate human work that the filters mistakenly flag. Academic or heavily edited writing is even more susceptible, since editing smooths out the deviations in grammar and structure. Regularity can also be obvious to readers, for instance in SEO copy that repeats the identical keyword like a refrain. Writers who depend on AI therefore have to walk a line: keep the text clean and polished, but not too clean or too polished. Varying sentence length and tone also helps the text sound less robotic.

Ethical Considerations and Intent

When using an AI humanizer, the goal should be not only to bypass detection systems but to bring out the ‘real’ writer. The point is not to hide the fact that you are using AI, but to make your text authentic.

Bypassing AI detection ethically means humans do the actual editing and creating while the AI assists. This is what I have consistently done, in keeping with the obvious constraints: academic integrity and copyright.

Humanizers shouldn’t be used to cheat or plagiarize; as is often the case, people who do this ruin it for everyone else. Instead, use them as part of your fine-tuning, as a safeguard for your credibility, and as a way to keep your human voice alive, even after your text has passed AI checks.

What Are AI Humanizer Tools?

AI humanizer tools give AI-generated writing a human-like quality. They modify structure, vocabulary, and flow to help the text evade AI detection. They are single-purpose utilities, in this case for altering the patterns of a text, and much has been made of their ethical and legal uses.

Definition and Purpose

AI humanizing is the process of rewriting AI-generated content so that it sounds as if a real person wrote it. Humanizers strive to avoid robotic phrases and predictable sentence patterns.

They accomplish this by varying word choice, mixing sentence lengths, and shifting style here and there. Because detection software can pick up AI writing, humanizers are useful for making the text less sterile while preserving the original meaning.

How Humanizer Tools Transform AI Content

These utilities replace typical AI phrasing with more natural wording. They favor accessible vocabulary, simplify complex words, and vary sentence lengths and patterns.

A few highlight the modifications they make, so you can see what has been altered. Humanize AI Text and offerings such as Overchat AITools eliminate redundancy and add a more conversational tone.

Most can handle multiple languages and anything from short blurbs to large documents. The main message usually survives these alterations.

Bypass AI Detection Ethically: Use Cases

There are legitimate uses for AI humanizer technologies. Educators and businesses use them to polish new content when AI is involved in producing it: perhaps you want your AI draft to read more like a human’s, or you want to protect your privacy.

However, they should not be used as a way of fooling or cheating others, or for plagiarism.

This is also why it is ethically necessary to combine AI humanizers with real human editing, which brings in your own experience and fact-checking.

Even where writing is AI-assisted, transparency and due credit are still key.

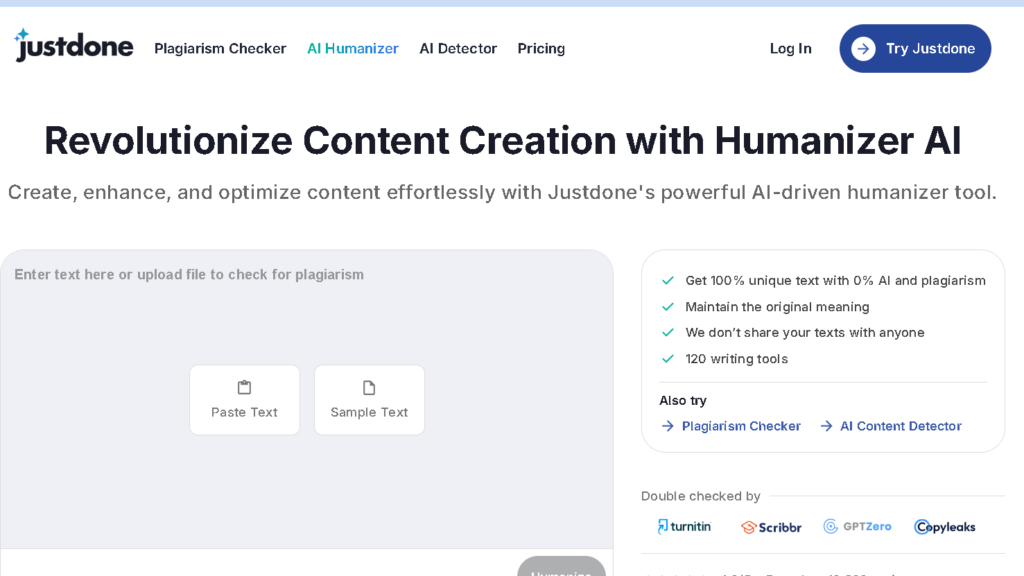

Top AI Humanizer Tools to Bypass Detection in 2025

Only a handful of AI humanizer tools stand out in 2025, because they can rewrite AI text in a way that retains the “sound” of natural human language.

These tools aim to get past detectors such as GPTZero and Turnitin without sacrificing tone or clarity.

Whatever you write, whether SEO content, academic work, or something else, there is probably a tool for you.

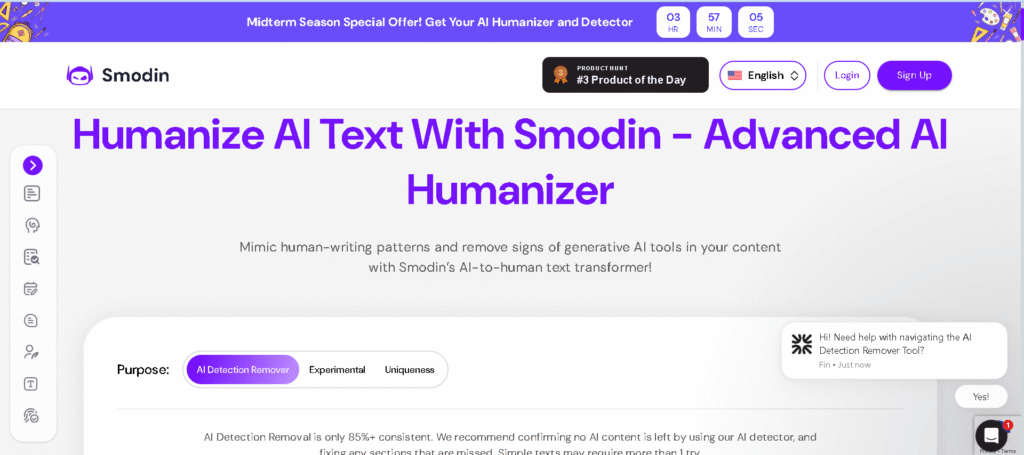

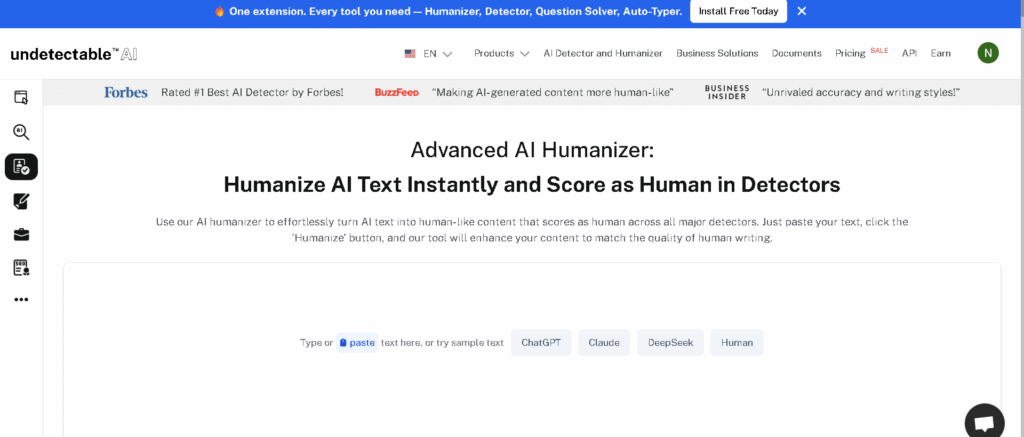

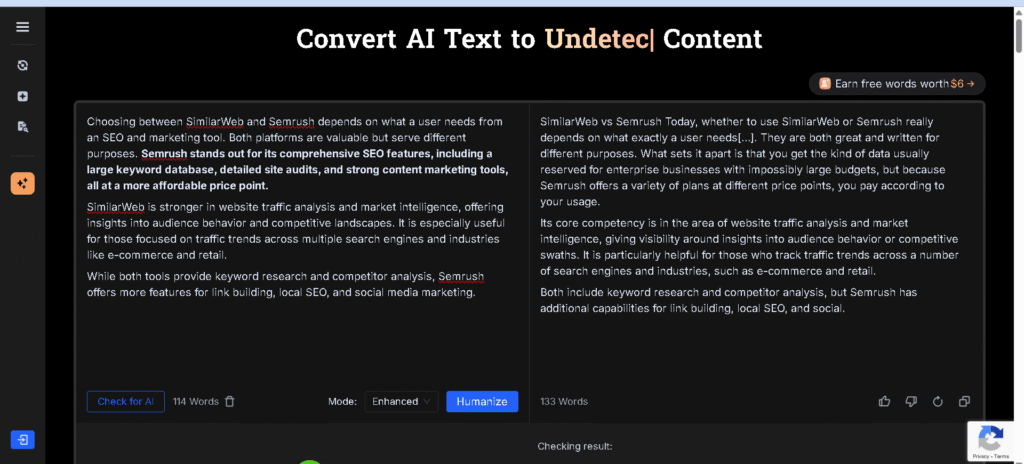

Undetectable AI

Undetectable AI has a reputation for slipping past tough AI detectors. It is very capable of turning AI content into writing that reads as if a human wrote it.

The tool adjusts sentence structure and word choice carefully to avoid repetitive, robotic phrasing. It is ideal for writers who need SEO-friendly, readable content.

Some users report low AI detection scores when checking their text on multiple websites. It is popular for a reason: the trade-off between accuracy and sounding natural is acceptable, which makes it worth considering if you are a blogger, marketer, or other professional.

StealthWriter and StealthGPT

StealthWriter and StealthGPT are really for longer-form work: whitepapers, reports, in-depth guides.

They work well on dry, dense text, and in particular they are said to hold up against strict detectors such as GPTZero.

They keep main keywords and don’t compromise on readability, which is good if you work with SEO projects.

StealthGPT outputs relatively safe sentences, less likely to require significant post-editing for fluency.

It’s fairly user friendly and has a quick turnaround time, so it is a nice alternative for a major project.

Pricing is competitive and lets freelancers and businesses take the plunge without breaking the bank.

HIX Bypass, WriteHuman, and BypassGPT

HIX Bypass, WriteHuman, and BypassGPT each address different needs, but all of them aim to help your AI text evade detection and sound human.

- HIX Bypass has been purpose-built to be SEO friendly, leaving all your keywords intact while sliding under the radar of detection flags.

- WriteHuman is ideal for blogs or marketing, and its focus is helping you maintain your voice and tone.

- BypassGPT does not have a lot of options to fiddle with, which is great if all you want is a quick result.

Many of these tools have free or cheap versions, so you don’t have to break the bank right away.

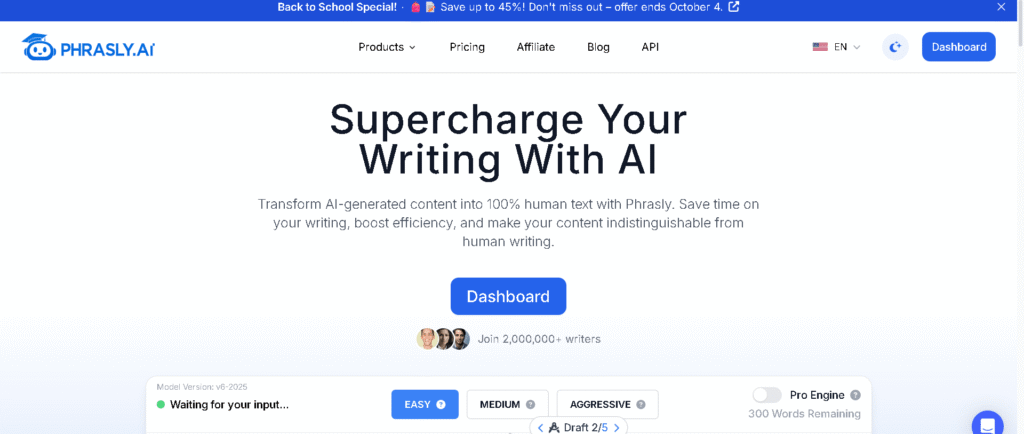

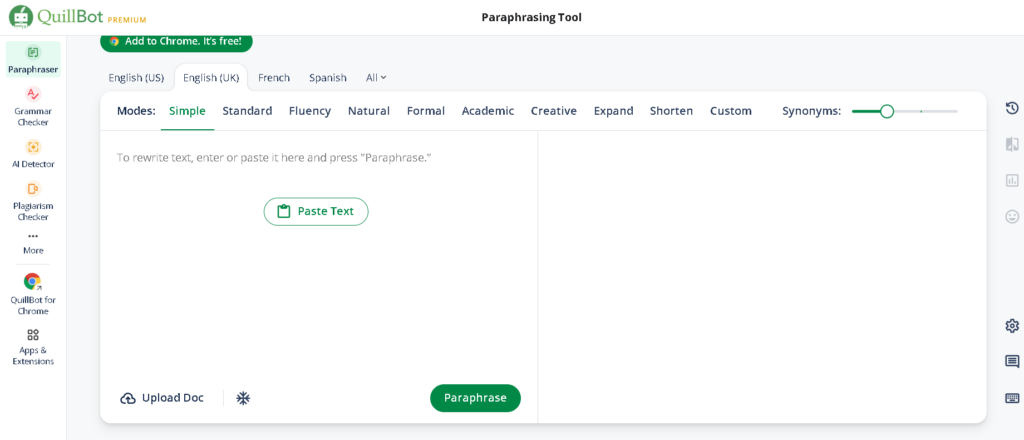

Quillbot, Phrasly, Humbot, and More

Quillbot, Phrasly, and Humbot are popular for their versatility beyond humanizing AI text.

- I like to use Quillbot for paraphrasing and rewriting, but be prepared to make a few manual edits for optimal results.

- Phrasly employs sophisticated sentence paraphrasing for improved fluency and naturalness of the generated text.

- For informal projects, Humbot is a good free tool with decent bypass rates.

These are good, cheaper alternatives to the more specialized humanizers. You may want to test their output against your preferred AI detectors before using them on big projects.

Comparing Key Features of Leading Humanizer Tools

Not all humanizer tools are created equal. Some focus on hiding the AI origin of your text, some on keeping it clean, and others let you tweak tone and language.

Cost and usability vary as well.

In the end it comes down to what you need. Just don’t sacrifice meaning or quality while trying to avoid detection.

Bypass Rate and Detector Compatibility

The bypass rate is how often a tool’s output gets past AI detectors such as GPTZero, Turnitin, and others. The top humanizers now reach 95% and higher, and some even 99%.

This is possible because most detectors cannot easily detect rewritten text. It also helps if a tool checks its output against multiple detectors, to ensure broad coverage.

For example, WriteHybrid and Undetectable AI produce generally effective output on multiple platforms. But don’t rely on tools that quietly change your meaning; accuracy counts as much as stealth.
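
Since a bypass rate is just a ratio, you can compute it from your own checks instead of taking a quoted percentage on trust. Here is a minimal Python sketch. The detector names and the pass/fail outcomes are hypothetical placeholders, not measurements from any real tool, and `bypass_rate` is a name I made up for illustration.

```python
def bypass_rate(results: list[bool]) -> float:
    """Fraction of trials in which the text was NOT flagged as AI-written."""
    if not results:
        raise ValueError("no trials recorded")
    return sum(results) / len(results)


# Hypothetical outcomes per detector: True means the text passed as human.
trials = {
    "detector_a": [True, True, True, False],
    "detector_b": [True, False, True, True, True],
}

for name, outcomes in trials.items():
    print(f"{name}: {bypass_rate(outcomes):.0%}")
```

With only a handful of trials the percentage swings a lot, so run a reasonable number of samples before reading much into the number.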

Readability, Natural Flow, and Authenticity

Humanized text should not sound stilted. A good tool preserves the meaning of the original message and does not render it awkward or confusing.

This helps the content stay genuine and compelling. The best humanizers keep the text at an appropriate reading level, adjusting word sophistication and sentence complexity to the audience.

By natural flow I simply mean that sentences link together well and the text reads as if a human produced it. Good content does not sound so robotic that it screams “AI”.

Test the output yourself to see whether it fits your brand and avoids sounding robotic.

Tone Customization and Multilingual Support

Some tools even let you choose the tone: formal, casual, emotional, you name it. This flexibility helps your content resonate with the reader.

Multilingual support is a big plus as well. Humanizer.org offers over 50 languages, which is practical if you have an international readership.

Tone options might include academic, marketing, or creative. The voice can be tweaked while still keeping it real. To be fair, more customization often comes with a higher price or a subscription-based model.

Consider if you actually need those extras or if a basic plan works for you.

Ease of Use and Pricing Considerations

Ease of use matters a great deal. A good tool has a simple interface and processes text quickly (usually in under five seconds, even for large chunks of text).

Free trial word allowances, color-coded edits, and easy signups all help. Prices range from free plans with daily word limits to monthly subscriptions starting at about $7 to $20.

Higher tiers unlock more words, additional modes, or faster speeds. The right choice depends on your budget and how much you write.

If you are a casual user, the free plan at Humanize AI, at 200 words a day, may be sufficient. Professionals will likely want the premium versions, with more features and support.

The less expensive options won’t have all of the bells and whistles, and you might max out on output size or customization.
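
To see what a daily word cap means in practice, here is a quick back-of-the-envelope sketch in Python. The 200-words-a-day figure is the free plan mentioned above. The function name `days_needed` and the 1,500-word example are my own assumptions for illustration.

```python
import math


def days_needed(word_count: int, daily_limit: int = 200) -> int:
    """Number of days required to process a document under a daily word cap."""
    if daily_limit <= 0:
        raise ValueError("daily_limit must be positive")
    return math.ceil(word_count / daily_limit)


# A 1,500-word blog post on a 200-words-a-day plan:
print(days_needed(1500))  # 8
```

If that wait is too long for your typical project, it is a sign that a paid tier may be worth it.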

Strategies for Humanizing AI Text Effectively

If you want authentic content that AI detectors won’t flag, you will need to add some personality, restructure for better flow, and include unique information. This is how you move AI content from mechanical to engaging.

Editing for Personal Voice and Style

One big step is editing the AI’s text so that it actually sounds like you. Vary your words and rhythms to suit your voice.

Writing assistants can help with tone and phrasing so the text feels less mechanical. Work in your own idiosyncrasies, idioms, or vernacular expressions; they make the text come alive.

Be consistent in your style, whether informal or formal. And don’t worry too much about flawless grammar or tidy patterns; AI tends to overdo both.

Building Human-Like Structure and Flow

What makes writing human-like is a mix of sentence lengths and smooth transitions. Most long sentences are easier to read when broken into shorter ones.

Words such as “however,” “for example,” or “in addition” give the text a more natural cadence. Keep paragraphs short, but not uniformly so.

Throw in some questions and directions to the reader to keep it interesting. AI content often lacks this kind of variety, so restructuring is usually necessary.

Injecting Originality and Unique Details

If you want to get past detectors like Originality.ai, you must be personal. Throw in some unique facts, examples, or quirky insights that only you would have.

Anecdotes or absurdly specific pieces of information? Those are what set your writing apart and, frankly, are difficult for any AI to mimic.

Throw in the occasional personal comment or explanation. It lends authenticity, as if a real person just sat down and typed it.

Oh and hey, please, let’s not recycle those canned AI lines. They are a surefire sign.

Ensuring Ethical Use and Compliance

Are you using a humanizer to avoid AI detection? There is little point if you ignore quality and honesty. There is a fine line between wanting to sound original and getting your point across.

Avoiding Plagiarism and Maintaining Quality

The priority here is producing plagiarism-free content. It is about more than getting past a detector; the work must be original and well researched so that it is actually relevant.

Humanizer tools help clean up your language, but they must not substitute for your own ideas or facts. Relying on them that way is lazy and, to me at least, it shows.

Editing and review are a big deal. Check the details and flow and feel free to substitute other words or rearrange your sentences. Regardless, do not settle for anything but the truth.

Shortcuts tend to boomerang. If you copy or recycle work in any way, shape, or form, someone will find out. Always double-check your sources and give credit where credit is due. Beyond that, it is a question of ethics: respecting intellectual property means people will trust you down the line.

Transparency and Disclosure Best Practices

The use of AI or humanizer tools should be explicitly disclosed. You don’t need to reveal every nitty-gritty detail, but tell people if AI played a major role.

Being transparent from the beginning about AI participation in a project also preempts claims of plagiarism or other improprieties. It shows that you are not afraid to acknowledge these types of tools as part of the creative process.

Individuals and organizations should develop disclosure policies based on what is appropriate for their audience. Perhaps it is a brief note on editing, or a disclosure of which lines of text were altered by a human and which by a computer.

Transparency, in the end, is simply polite. People have a right to know how something was made and it helps you to maintain credibility.

Bypass AI Detection Ethically: Frequently Asked Questions

Enough of the specifics. What follows are tips for making automated text sound more like, well, a human. They cover how to avoid detection, which tools are useful, and the ethical side as well.

What methods are effective at making automated interactions appear human?

Vary your sentences. Keep some short and punchy, then surprise the reader with a longer thought. Add a personal comment or rhetorical question; don’t make the text completely rigid.

What steps can one take to ensure AI detectors do not flag human-like automated behavior?

It takes substantial manual editing. Vary the tone, correct the grammar, and develop your own style. Avoid repeating the same terms, and combine the AI-generated content with your own writing to keep things fresh.
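
Spotting repeated terms by eye is tedious, so here is a small Python sketch that lists the words a draft leans on most. It is only a self-editing aid under my own assumptions: the function name `repeated_terms`, the tiny stopword list, the length cutoff, and the threshold of three occurrences are all arbitrary choices, not part of any tool mentioned here.

```python
import re
from collections import Counter

# A deliberately tiny, assumed stopword list; extend it for real use.
STOPWORDS = {"that", "this", "with", "have", "from", "your", "they", "them", "will"}


def repeated_terms(text: str, min_count: int = 3) -> list[tuple[str, int]]:
    """Return (word, count) pairs for longer words used at least `min_count` times."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if len(w) > 3 and w not in STOPWORDS)
    return [(w, c) for w, c in counts.most_common() if c >= min_count]


draft = (
    "Our platform is robust. The robust design makes the platform robust "
    "and the platform scalable."
)

for word, count in repeated_terms(draft):
    print(f"{word}: {count}")
```

Anything that shows up on the list is a candidate for a synonym or a restructured sentence. Whether to change it is still your call as the editor.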

Are there legitimate applications for tools that make bots seem human?

Yes. In practice, people use them for readability, privacy, and compliance with platform guidelines, so that computer-generated content doesn’t read like a boring machine-written advertisement to customers or like machine-written text to readers and teachers.

What are the ethical considerations when using technologies to mimic human behavior?

This is where transparency really counts. No one likes to be tricked, and that holds in both academic and professional contexts. If you used a bot, please say so.

How can pattern variation be introduced in automated processes to evade AI detection?

Try mixing sentence length and structure, making your work less predictable. If it feels right, throw in some regional slang, cultural tidbits, or even bullet points. Anything outside of the box can let you slip through the cracks.

What current technologies are known to successfully reduce the likelihood of AI detection?

AI text humanizers are not bad at all. They adjust tone, rework sentence construction, and replace words so the text more closely resembles something a real person might say.

Services like aihumanizerapp.com, for example, are used to bypass even sophisticated AI detectors like GPTZero. They are not perfect, but for now these tools appear to have some impact.